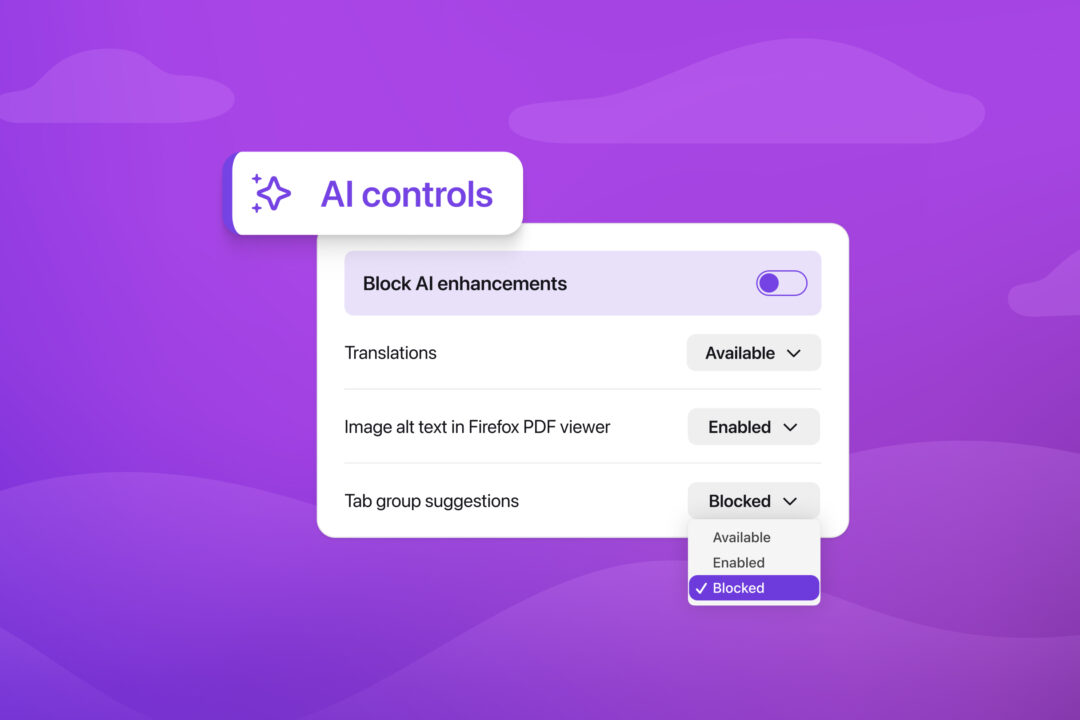

Starting with Firefox 148, which rolls out on Feb. 24, you’ll find a new AI controls section within the desktop browser settings. It provides a single place to block current and future generative AI features in Firefox.

They actually listened to the community, that’s very nice.

To be fair, their reduced focus and the potential pace improvement through LLM-assisted coding might cancel each other out. I wouldn’t be surprised if the resulting pace change is net zero or better.

That said: I like Firefox local translations, but haven’t found a use case for its other AI features yet.

Have we actually seen any evidence that LLMs increase the pace of coding? Because in most of the reports I’ve seen, there is no measurable difference even when users feel like they’re faster.

I can get an LLM to write prototypes and demos in the background while I am working on other parts of the code at the same time.

With the right prompt, I can generate and scaffold documentation pages, which I may not otherwise have time to do.

Things are happening in the background and I get more done.

I feel like I am faster?

Feeling like you’re faster just because you can generate easy boilerplate code doesn’t actually mean you’re faster if it spits out junk, takes longer to debug and integrate that code, or if the tasks require more complex work that LLMs are bad at.

I just wanted to see some concrete stats given how much everyone is implementing it and hyping it up, as anecdotal evidence is easily biased by shiny new toy syndrome.

How about giving it a try, if you happen to have work that is primarily text-based (like programming)?

Pick something that you have expertise in, and see whether an LLM can help automate it. Usually this requires using an LLM with tooling that provides agentic capability (if you are into this, check out Opencode / Kilocode / Roo code).

Once you get the hang of it, try mixing in an area where you are less knowledgeable but still have an idea of what you are trying to accomplish. This is what fascinates me.

Don’t jump straight into vibe coding, or into a domain where you have zero knowledge, and expect good, repeatable results (not now, at least).

In my case, I am a Web UI developer, and I have quite extensive knowledge of that. For serious work, I can provide (or get it to reference) my requirements, so that it creates close to 85-95% of what I need. I can easily verify the result and make modifications. If not, I just delete the entire work, refine my original idea, and ask the LLM to try again.

But occasionally I need to test my work on native iOS and Android devices. These are not my areas of expertise, and I have no interest in learning them yet. In the past, I would either get someone to build a prototype for me, or read the docs and produce a lower-quality result myself.

Now, I ask an LLM to create a prototype in 5 minutes, then add different scenarios and features in the next 5 minutes, allowing me to test my work and iterate much faster. If I really wanted to do that myself, it might take a day or two, time that I could have spent improving my own work.

This is throwaway work that will only be used a few times. I don’t care about maintaining or debugging it.

Another case is report and visualization generation for data. Instead of reading several pages of documentation on how to create these visualizations and trying to architect the data flow, now I just ask the LLM: “given this data that shows the relationship between x and y, create a report with visualizations focused on xyz”.

Nowadays LLMs produce work that already surpasses what a junior-to-mid-level developer can produce. And if I am not satisfied with the work, I just delete the whole thing and redo it. No hard feelings, no need to convince the other person that their time has just gone to waste.

The only worry I have now is that people will stop learning because of this, and that companies will stop hiring new junior developers.

Without junior developers, there will be no senior developers. Or maybe LLMs will eventually make experts obsolete? Right now I don’t think that is possible yet.

There are some concerns but yes, development generally accelerates: https://arxiv.org/abs/2507.03156

They meant with the cleaning up after it.

So they AI-summarized other people’s work.

And they later acknowledge there are major gaps in methodology. I wouldn’t be linking to this as proof of accelerated dev imho.

What I’ve read, and what is accurate according to my experience, is that it’s very fast for creating smaller pieces of code, like scripts, foundations, docs etc. So with a small context, it’s great.

As soon as you start asking it to add features to a larger code base, it will mess things up, add code that is not necessary, and add extra complexity. At that point it is slower to use AI than not, because you are spending time iterating and correcting, and you may not even get a working solution at all.

That’s my experience with AI.

I think it does speed things up, since it can generate syntax quickly, but it’s not very good code and leads to a big mess. Eventually you want to rewrite it from scratch yourself.

But I think it helps for sure. The alternative, finding all the syntax yourself and writing it correctly, is very time-consuming, although you also gain a much better understanding by doing that.